Representation Learning MSc course 2023: videos and materials

This page includes information about the Representation Learning course, designed by Nikolas Adaloglou and co-taught with Felix Michels in the summer semester of 2023 at the University of Dusseldorf, Germany.

Overview

This course was tailored to students of the MSc in AI and Data Science at Heinrich Heine University Dusseldorf. However, with some essential background in deep learning, you should be able to follow most of the topics covered in the lectures and exercises.

All course materials, including lectures, slides, and exercise classes, are free and publicly available. Start from GitHub or YouTube.

Below, we present a summary of the topics covered each week.

Week 1 - Introduction to Representation Learning

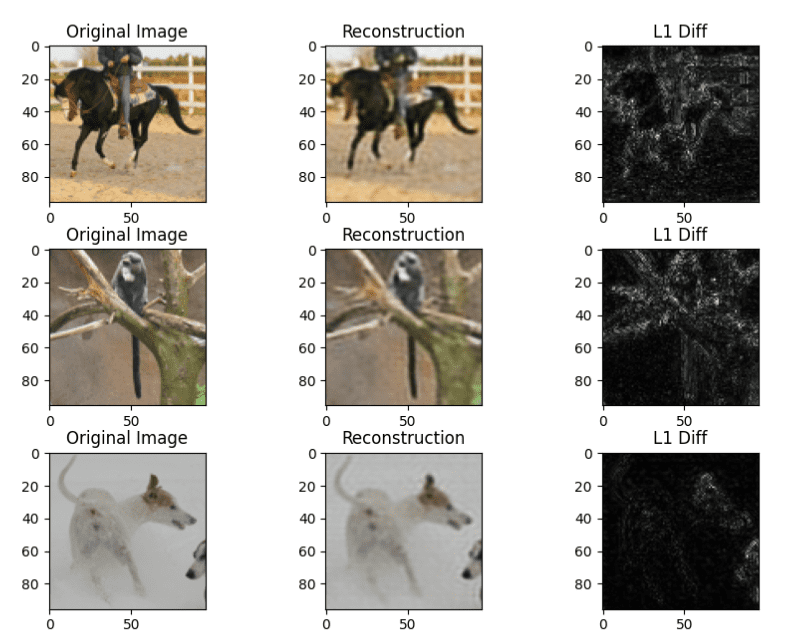

In the first lecture, we give a general introduction to representation learning, starting from autoencoders and early traditional approaches based on the influential paper by Bengio et al. (2012). The exercise consists of training an image autoencoder. An autoencoder (AE) is a neural network that learns to reconstruct its input by first compressing it into a lower-dimensional representation, called the bottleneck or latent representation. Autoencoders can be used for representation learning by using the trained encoder as a feature extractor (here, a feature is any transformation of the input data). You also need to implement linear probing, the standard method to evaluate the (intermediate) learned representations.
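Linear probing freezes the encoder and trains only a linear classifier on its outputs, so the probe's accuracy measures how linearly separable the learned features are. Below is a minimal plain-Python sketch, assuming a hypothetical `frozen_encoder` that stands in for the trained AE encoder, toy data, and a binary logistic-regression probe. In the exercise you would use the actual encoder, a multi-class probe, and a deep learning framework.

```python
import math

def frozen_encoder(x):
    # Hypothetical stand-in for the trained AE encoder: a fixed map from a
    # 4-d input to a 2-d feature. Linear probing never updates it.
    return [x[0] + x[1], x[2] - x[3]]

def train_linear_probe(features, labels, lr=0.5, epochs=200):
    """Binary logistic regression trained with SGD on frozen features."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            g = p - y  # derivative of the log-loss with respect to z
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b

def probe_accuracy(w, b, features, labels):
    preds = [int(sum(wi * fi for wi, fi in zip(w, f)) + b > 0) for f in features]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

# Hypothetical toy data: the label depends only on the first input coordinate.
train_x = [[a, 0.0, c, 0.0]
           for a in (-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0)
           for c in (-1.0, 0.0, 1.0)]
train_y = [int(x[0] > 0) for x in train_x]
feats = [frozen_encoder(x) for x in train_x]  # encoder is only evaluated, never trained
w, b = train_linear_probe(feats, train_y)
acc = probe_accuracy(w, b, feats, train_y)
```

For brevity the sketch measures accuracy on the training features; a proper evaluation reports the probe's accuracy on a held-out test split.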

Week 2 - Overview of visual self-supervised learning methods

This lecture focuses on distinguishing self-supervised learning from transfer learning. We define pretext versus downstream tasks and other commonly used terminology. We cover the most common early vision-based self-supervised approaches: image colorization, jigsaw puzzles, image inpainting, Shuffle and Learn, classifying corrupted images, rotation prediction, and video-based techniques. We also briefly discuss related semi-supervised learning approaches (consistency loss) and introduce contrastive learning (InfoNCE).
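For reference, one common way to write the InfoNCE objective: take an anchor embedding $q$, one positive $k^{+}$, and a candidate set $\{k_1, \dots, k_N\}$ that contains $k^{+}$ plus $N-1$ negatives, with cosine similarity $\mathrm{sim}$ and temperature $\tau$ (the notation is chosen here for illustration):

```latex
\mathcal{L}_{\text{InfoNCE}} = -\,\mathbb{E}\left[\log \frac{\exp\left(\mathrm{sim}(q, k^{+})/\tau\right)}{\sum_{n=1}^{N} \exp\left(\mathrm{sim}(q, k_n)/\tau\right)}\right],
\qquad
I(q; k^{+}) \ge \log N - \mathcal{L}_{\text{InfoNCE}}
```

The second expression is the mutual information lower bound from contrastive predictive coding (van den Oord et al., 2018). Since the loss is non-negative, the bound can never exceed $\log N$, which is one motivation for using many negatives.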

In this week’s exercise, we train a ResNet18 on rotation prediction, a simple yet effective way to learn rich representations from unlabeled image data. The network is trained to predict which of four angles (0°, 90°, 180°, or 270°) a given image has been rotated by. Below are the expected results:

Results from rotation prediction. Image by author.
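Here is a minimal sketch of how the self-supervised labels can be generated, assuming square images stored as nested Python lists (rows of pixels). The helper names are hypothetical. In the exercise, the rotated batch would be fed to a ResNet18 with a 4-way classification head trained with cross-entropy.

```python
def rotate90(img):
    """Rotate a square 2-D image (list of rows) by 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]

def make_rotation_batch(images):
    """Each image appears 4 times; label k means the image was rotated by k * 90 degrees."""
    inputs, labels = [], []
    for img in images:
        rotated = img
        for k in range(4):
            inputs.append(rotated)
            labels.append(k)
            rotated = rotate90(rotated)
    return inputs, labels

inputs, labels = make_rotation_batch([[[1, 2], [3, 4]]])
```

Because the labels come for free from the transformation, no human annotation is needed. The network can only solve the task by recognizing object orientation, which requires some semantic understanding of the image.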


Week 3 - BERT: Learning Natural Language Representations

This week’s lecture covers the basics of Natural Language Processing (NLP), a recap of RNNs, attention, and Transformers, and language pretext tasks for representation learning in NLP, with an in-depth look into BERT.

In this exercise, you will train your own small BERT model on the IMDB dataset. You will then use the model to classify the sentiment of movie reviews and the sentiment of sentences from the Stanford Sentiment Treebank (SST2).
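BERT's masked language modeling objective selects about 15% of the input tokens. Of those, 80% are replaced by `[MASK]`, 10% by a random token, and 10% are left unchanged. The sketch below shows that corruption step in plain Python, assuming integer token ids. `MASK_ID = 103` matches the `bert-base-uncased` vocabulary but is only a placeholder for whatever tokenizer the exercise uses. The value `-100` is the label that PyTorch's `CrossEntropyLoss` ignores by default.

```python
import random

MASK_ID = 103  # placeholder id of the [MASK] token; depends on the tokenizer
IGNORE = -100  # label for positions that do not contribute to the loss

def mask_tokens(token_ids, vocab_size, mask_prob=0.15, rng=None):
    """Return (corrupted inputs, labels) following BERT's 80/10/10 masking rule."""
    rng = rng or random.Random()
    inputs = list(token_ids)
    labels = [IGNORE] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_ID  # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels
```

Keeping some selected tokens unchanged or randomized prevents the model from relying on the `[MASK]` token, which never appears during fine-tuning on tasks such as SST2.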

Week 4 - Contrastive Learning, SimCLR, and mutual information-based proof

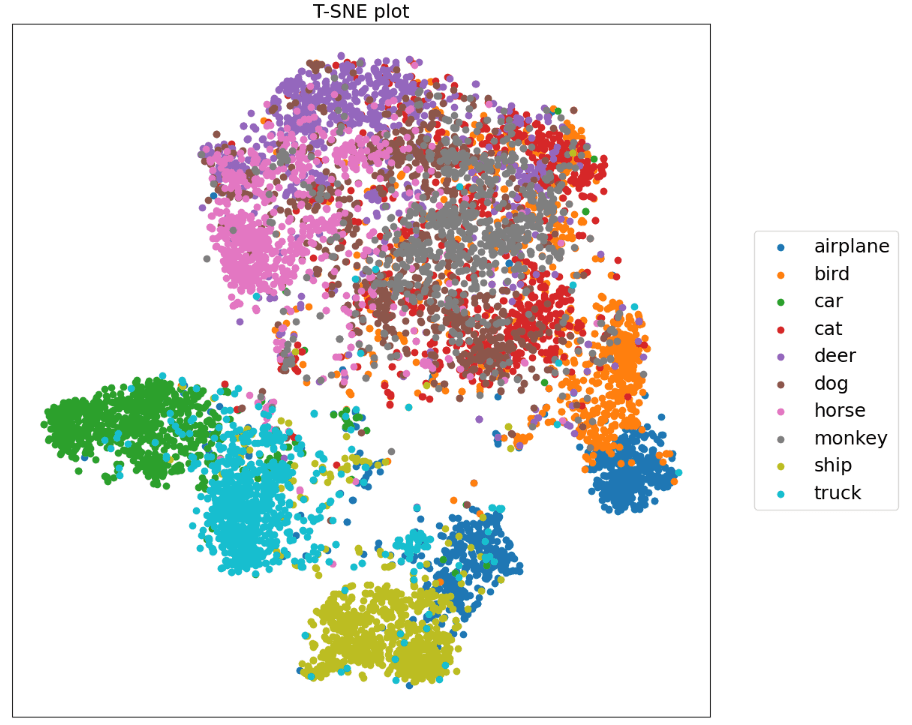

This lecture focuses heavily on the theory and math behind contrastive learning. Notes are also available. We look deeply into contrastive learning and provide a proof of the mutual information (MI) bound. The exercise consists of building and training a SimCLR ResNet18 on CIFAR10.

t-SNE visualization of the learned representations from SimCLR.
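The contrastive loss used by SimCLR (NT-Xent) can be sketched in plain Python as below. This is a minimal, unbatched illustration that assumes each input row is already a projection-head output. The function names and the temperature `tau=0.5` are illustrative choices. The exercise itself would use a vectorized PyTorch implementation.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def nt_xent(z1, z2, tau=0.5):
    """NT-Xent loss for N positive pairs (z1[i], z2[i]), i.e. 2N augmented views."""
    z = [l2_normalize(v) for v in z1 + z2]  # list concatenation: views 0..N-1, then N..2N-1
    n = len(z1)
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # index of the other view of the same image
        sims = [sum(a * b for a, b in zip(z[i], z[k])) / tau for k in range(2 * n)]
        # Denominator: every view except the anchor itself (positive included).
        denom = sum(math.exp(s) for k, s in enumerate(sims) if k != i)
        total += -(sims[pos] - math.log(denom))
    return total / (2 * n)
```

Every other view in the batch acts as a negative, which is why SimCLR benefits from large batch sizes.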


Week 5 - Understanding Contrastive Learning, MoCo, and Image Clustering

This lecture covers:

Contrastive learning, L2 normalization, and properties of the contrastive loss

Momentum encoder (MoCo) and issues with batch normalization

Deep image clustering: task definition and challenges, k-means, SCAN, PMI, and TEMI

A MoCo implementation is provided as a reference.
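MoCo's two key ingredients can be sketched without a deep learning framework. First, the key encoder's parameters are an exponential moving average of the query encoder's parameters. Second, negatives come from a first-in-first-out queue of past keys. The sketch below assumes parameters are flattened into plain lists of floats. `m=0.999` is the default momentum reported in the MoCo paper, and the class name `KeyQueue` is hypothetical.

```python
from collections import deque

def momentum_update(key_params, query_params, m=0.999):
    """theta_k <- m * theta_k + (1 - m) * theta_q; the key encoder gets no gradients."""
    return [m * k + (1.0 - m) * q for k, q in zip(key_params, query_params)]

class KeyQueue:
    """Hypothetical FIFO dictionary of negative keys; oldest keys are dropped when full."""

    def __init__(self, max_size):
        self.keys = deque(maxlen=max_size)

    def enqueue(self, batch_keys):
        # Keys from the current batch become negatives for future batches.
        self.keys.extend(batch_keys)

    def negatives(self):
        return list(self.keys)
```

The slowly moving key encoder keeps the queued keys consistent with each other. This consistency is what allows the number of negatives to be decoupled from the batch size.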

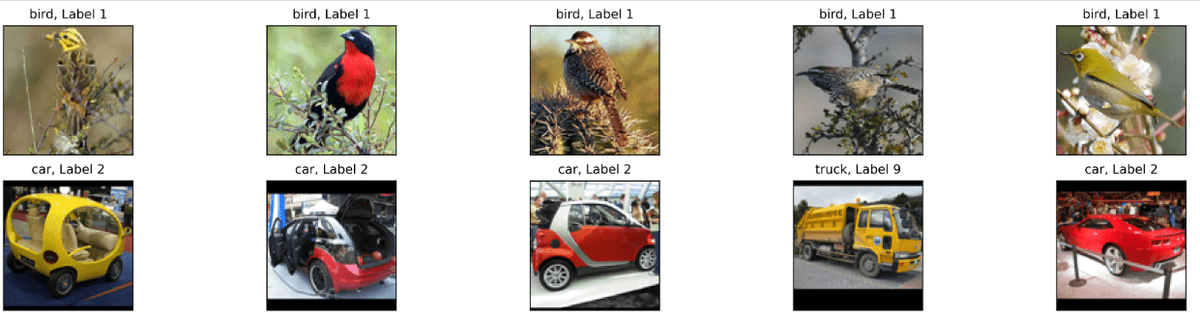

The corresponding exercise leverages a pretrained MoCo ResNet50 for image clustering with SCAN and PMI (pointwise mutual information). Both methods rely on the observation that nearest neighbors in the feature space learned by visual self-supervised learning tend to share the same semantics.

k-NN illustration for 2 image samples (right) and their retrieved neighbors.
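The neighbor-mining step that SCAN builds on can be sketched as follows. The sketch assumes the features have already been extracted by the pretrained encoder (hypothetical toy vectors stand in for them) and uses cosine similarity. SCAN then trains a clustering head that assigns each sample and its mined neighbors to the same cluster; that training step is not shown.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def mine_neighbors(features, k):
    """For every sample, return the indices of its k most similar other samples."""
    neighbors = []
    for i, f in enumerate(features):
        scores = [(cosine(f, g), j) for j, g in enumerate(features) if j != i]
        scores.sort(reverse=True)  # highest similarity first
        neighbors.append([j for _, j in scores[:k]])
    return neighbors

feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]  # hypothetical features
print(mine_neighbors(feats, k=1))
```

This quadratic loop is only for illustration. For a full dataset, the similarities would be computed as one matrix product on normalized features.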


Week 6 - Vision Transformers and Knowledge Distillation

This lecture covers:

Transformer encoder and Vision transformer

ViTs vs. CNNs: receptive field and inductive biases of each architecture

Introduction to knowledge distillation and the mysteries of model ensembles

Knowledge distillation in ViTs and masked image modeling
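A ViT first splits the image into non-overlapping patches and flattens each one. Every flattened patch is then linearly projected to the embedding dimension and combined with a position embedding before entering the Transformer encoder. Below is a minimal sketch of the patching step, assuming an H x W x C image stored as nested lists (the function name is hypothetical).

```python
def patchify(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened non-overlapping patches."""
    h, w = len(image), len(image[0])
    if h % patch_size or w % patch_size:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            patch = []
            for r in range(top, top + patch_size):
                for c in range(left, left + patch_size):
                    patch.extend(image[r][c])  # append all channels of pixel (r, c)
            patches.append(patch)
    return patches
```

For a 32 x 32 x 3 CIFAR image and a patch size of 4, this yields 64 patches of length 48, i.e. a sequence of 64 tokens. The small, fixed patch grid is one source of the weaker locality bias of ViTs compared to CNNs.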

Exercise: Knowledge distillation on CIFAR100 with Vision Transformers.
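Below is a sketch of the classic soft-target distillation loss (Hinton et al., 2015). It is one possible objective for this exercise, not necessarily the one it prescribes. The student matches the teacher's temperature-softened class distribution, the KL term is scaled by T², and the result is mixed with the usual cross-entropy on the true label. The values `T=4.0` and `alpha=0.5` are illustrative; for CIFAR100 the logit vectors would have 100 entries. ViT-specific variants such as DeiT's distillation token are not shown.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def soft_target_loss(student_logits, teacher_logits, T):
    """T^2 * KL(teacher || student) on temperature-softened distributions."""
    p_t = softmax(teacher_logits, T)
    p_s = softmax(student_logits, T)
    return T * T * sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)

def kd_loss(student_logits, teacher_logits, label, T=4.0, alpha=0.5):
    hard = -math.log(softmax(student_logits)[label])  # standard cross-entropy
    return alpha * soft_target_loss(student_logits, teacher_logits, T) + (1 - alpha) * hard
```

The T² factor keeps the gradient magnitude of the soft term roughly comparable to the hard term when the temperature changes.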

Week 7 - Self-supervised learning without negative samples (BYOL, DINO)

This lecture covers:

A short review of self-supervised methods

A short review of knowledge distillation

Self-supervised learning & knowledge distillation

An in-depth look into the DINO framework for visual representation learning

In this week’s exercise, you will implement and train a DINO model on a medical dataset: PathMNIST from MedMNIST, which consists of low-resolution images of various colon pathologies.
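The core of the DINO loss can be sketched as follows, assuming the student and teacher outputs for two different augmented views are given as plain lists of logits. The teacher output is centered (the center is an exponential moving average of teacher outputs) and sharpened with a low temperature. Together, these two steps help avoid collapse. The student is trained with cross-entropy against the teacher's distribution. The temperatures 0.1 and 0.04 and the center momentum 0.9 are in the range used by the DINO paper; the function names are hypothetical. The teacher weights themselves are updated as an EMA of the student, analogous to the MoCo momentum encoder.

```python
import math

def log_softmax(x, temperature):
    scaled = [v / temperature for v in x]
    m = max(scaled)  # subtract the max for numerical stability
    lse = m + math.log(sum(math.exp(v - m) for v in scaled))
    return [v - lse for v in scaled]

def dino_loss(student_out, teacher_out, center, t_s=0.1, t_t=0.04):
    """Cross-entropy H(P_teacher, P_student) for one pair of views.

    The teacher output is centered and sharpened (low temperature t_t);
    in a real implementation no gradient flows through the teacher branch.
    """
    centered = [t - c for t, c in zip(teacher_out, center)]
    p_t = [math.exp(v) for v in log_softmax(centered, t_t)]
    log_p_s = log_softmax(student_out, t_s)
    return -sum(pt * lps for pt, lps in zip(p_t, log_p_s))

def update_center(center, teacher_batch, m=0.9):
    """EMA of the mean teacher output over the batch (one row per sample)."""
    batch_mean = [sum(col) / len(teacher_batch) for col in zip(*teacher_batch)]
    return [m * c + (1 - m) * b for c, b in zip(center, batch_mean)]
```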

Week 8 - Mask-based visual representation learning: MAE, BEiT, iBOT, DINOv2

This lecture covers the following papers: MAE (Masked Autoencoders Are Scalable Vision Learners), BEiT (BERT-style pre-training in vision), iBOT (combining masked image modeling with DINO), and DINOv2 (Learning Robust Visual Features without Supervision). No exercise was given to the students this week.
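MAE masks a large random subset of patches (75% by default in the paper). Only the visible patches are passed to the encoder, and a lightweight decoder reconstructs the pixels of the masked ones, with the loss computed on masked patches only. The masking step itself can be sketched as below (the function name is hypothetical).

```python
import random

def random_masking(num_patches, mask_ratio=0.75, rng=None):
    """Return (visible, masked) patch indices using uniform random masking."""
    rng = rng or random.Random()
    order = list(range(num_patches))
    rng.shuffle(order)
    num_keep = int(num_patches * (1 - mask_ratio))
    visible = sorted(order[:num_keep])  # fed to the encoder
    masked = sorted(order[num_keep:])   # reconstructed by the decoder
    return visible, masked
```

For a 224 x 224 image with 16 x 16 patches (196 patches), 49 stay visible and 147 are masked. Running the encoder on only a quarter of the tokens is a large part of what makes MAE pre-training efficient.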

Week 9 - Multimodal representation learning, robustness, and visual anomaly detection

In this lecture, the following topics are discussed:

Defining Robustness and Types of Robustness

Zero-shot and few-shot learning

Contrastive Language Image Pretraining (CLIP)

Image captioning

Visual anomaly detection: task definition

Anomaly detection scores and metrics (AUROC)
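AUROC equals the probability that a randomly chosen in-distribution sample receives a higher score than a randomly chosen anomalous or OOD sample (the Mann-Whitney U formulation), with ties counted as one half. Below is a quadratic-time sketch, assuming higher scores mean "more normal". In practice one would call a library routine such as `sklearn.metrics.roc_auc_score`.

```python
def auroc(scores_in, scores_out):
    """P(score of an in-distribution sample > score of an OOD sample); ties count 1/2."""
    wins = 0.0
    for s_in in scores_in:
        for s_out in scores_out:
            if s_in > s_out:
                wins += 1.0
            elif s_in == s_out:
                wins += 0.5
    return wins / (len(scores_in) * len(scores_out))
```

An AUROC of 1.0 means the score separates the two groups perfectly, while 0.5 is chance level. Because only the ranking matters, the metric does not depend on choosing a threshold.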

The exercise focuses on using a pretrained CLIP model for out-of-distribution detection with different scores and approaches.
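One simple score for this task is the maximum softmax probability over the cosine similarities between an image embedding and the text embeddings of the in-distribution class prompts. An image that matches one known class much better than the others scores close to 1, while an OOD image spreads its probability mass across classes. The sketch below assumes the embeddings were already computed by CLIP's image and text encoders (hypothetical toy vectors stand in for them). It uses temperature 0.01, corresponding to the logit scale of 100 in released CLIP models. The exercise may use other scores as well.

```python
import math

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def zero_shot_probs(image_emb, text_embs, temperature=0.01):
    """Softmax over cosine similarities between one image and each class prompt."""
    img = normalize(image_emb)
    sims = [sum(a * b for a, b in zip(img, normalize(t))) / temperature for t in text_embs]
    m = max(sims)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in sims]
    total = sum(exps)
    return [e / total for e in exps]

def max_softmax_score(image_emb, text_embs, temperature=0.01):
    """Higher means more likely in-distribution (one known class matches well)."""
    return max(zero_shot_probs(image_emb, text_embs, temperature))

prompts = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # hypothetical class-prompt embeddings
in_score = max_softmax_score([1.0, 0.05, 0.0], prompts)
ood_score = max_softmax_score([0.0, 0.0, 1.0], prompts)
```

Scores computed this way for in-distribution and OOD test images can be fed directly into an AUROC computation.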

Week 10 - Emerging properties of the learned representations and scaling laws

This lecture was one of my favorites to give. The following topics are covered:

Investigating CLIP models and scaling laws

What determines the success of CLIP?

How does CLIP scale to larger datasets and models?

OpenCLIP: Scaling laws of CLIP models and connection to NLP scaling laws

Robustness of CLIP models against image manipulations

Learned representations of supervised models: CNNs vs. Vision Transformers (ViTs), the texture-shape bias

Robustness and generalization of supervised-pretrained CNNs vs. ViTs

Scaling (Supervised) Vision Transformers
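Scaling laws are typically expressed as power laws, e.g. loss or error ≈ a · N^(-b), where N is model size, data, or compute. Such laws become straight lines on a log-log plot. Below is a sketch of fitting such a law by least squares in log space. The data are synthetic and only illustrate the procedure; they are not results from CLIP or OpenCLIP. Some published fits also add an irreducible-error term, which this simple form omits.

```python
import math

def fit_power_law(sizes, losses):
    """Fit loss ~ a * size**(-b) via least squares on log(loss) = log(a) - b * log(size)."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(l) for l in losses]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return math.exp(intercept), -slope

# Synthetic example: generated from a = 5, b = 0.1 (not real measurements).
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * s ** -0.1 for s in sizes]
a, b = fit_power_law(sizes, losses)
```

Once fitted, the exponent b summarizes how quickly performance improves with scale. This is the quantity that lets scaling trends of different model families (or of vision and NLP models) be compared.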

Week 11 - Investigating the self-supervised learned representations

In this lecture, the following topics are discussed:

Limitations of existing vision language models

Self-supervised vs. supervised learned feature representations

What do vision transformers (ViTs) learn “on their own”?

MoCo v3 and DINO: combining ViTs with self-supervised learning

Self-supervised learning in medical imaging

Investigating self-supervised pre-training objectives

No exercise takes place this week.

Week 12 - Representation Learning in Proteins

This lecture was quite multidisciplinary, applying advances from NLP to biology and proteins. The topics covered are:

A closer look at the attention mechanism, focusing on natural language translation

A tiny intro to proteins (watch the relevant playlist on YouTube)

Representing protein sequences with Transformers: BERT masked language modeling versus GPT-like pretraining

Analyzing and investigating the attention maps of a pre-trained Transformer language model
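Both translation Transformers and protein language models are built on scaled dot-product attention, softmax(QKᵀ/√d_k)V. The sketch below implements it for a single head without masking, using plain nested lists as an illustrative assumption. The returned `attn` matrix is the kind of attention map analyzed in the lecture; a real model has one per head and layer.

```python
import math

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]

def attention(Q, K, V):
    """Single-head scaled dot-product attention without masking.

    Returns the output rows and the attention map (one row of weights per query).
    """
    d_k = len(K[0])
    attn = [softmax([sum(q * k for q, k in zip(qi, kj)) / math.sqrt(d_k) for kj in K])
            for qi in Q]
    out = [[sum(a * v[c] for a, v in zip(row, V)) for c in range(len(V[0]))]
           for row in attn]
    return out, attn
```

Each row of `attn` is a probability distribution over input positions (words in a sentence, or residues in a protein sequence). Inspecting these rows shows which positions a given token draws information from.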

The exercise involves using a pretrained protein language model.

Conclusion

Since this is our first time designing and teaching Representation Learning, some mistakes may exist. Important: Solutions to the exercises are not provided, but you can cross-check your results with the expected results in the notebook. If you encounter any issues, open an issue on GitHub.

We have gathered and summarized helpful material in case you plan to work on these topics. Representation learning will keep advancing over the years, but the principles discussed in this course should give you an excellent reference point for catching up with the latest state-of-the-art methods.